After bad-mouthing the monolith for a long time while promoting micro-services architecture, we hit the real-world wall. Micro-services are not the magic pattern they have been sold as for years.

This is primarily because most of us focused on the flexibility they give us while intentionally forgetting to stress their inherent complexity: the cost of weaving the services together, which one has to pay back at the end of the day. Now that we all have our own set of services, we are learning it the hard way. Not only is remotely deploying the ecosystem far from straightforward, but we also lose the ability to scale down. It then becomes even harder to deploy the stack locally in an efficient way. Basically, we traded performance for flexibility, and we are not even that flexible while losing performance for real. That is not a contract we are willing to sign off on anymore.

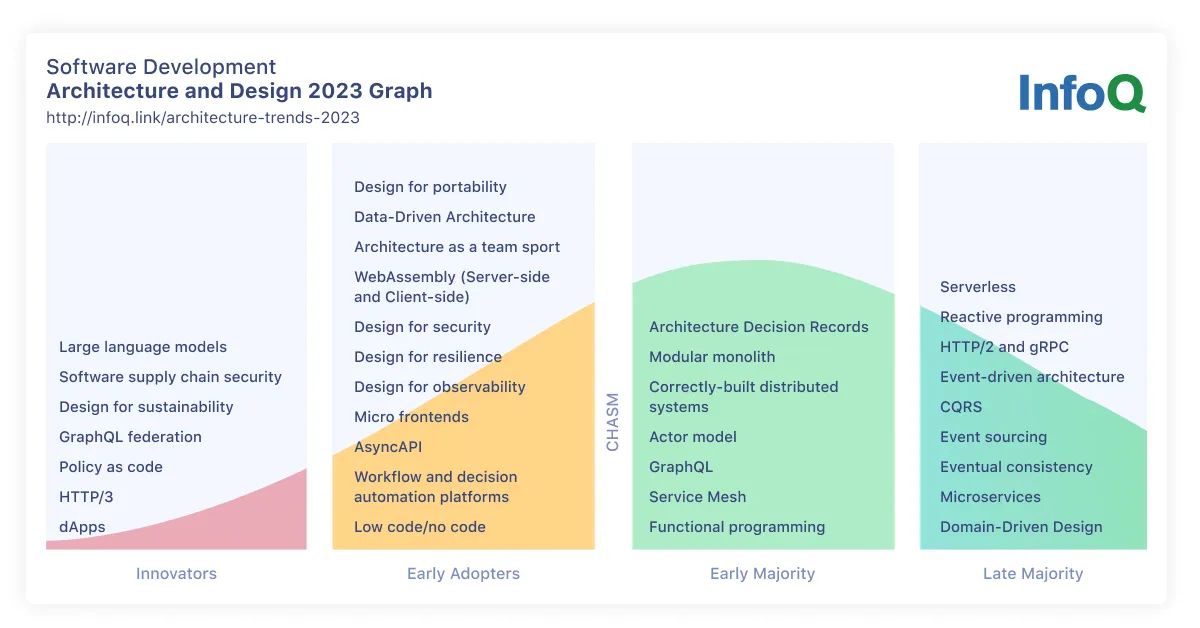

To remedy this situation, a new pattern, the modular monolith, arose and has been gaining traction for a couple of years now. In fact, according to the Spring 2023 InfoQ report, those who have not embraced it yet are considered late on the trail…

Paradigm

The underlying idea is to clearly separate the way software is composed from the way it is deployed. The way we chunk software into modules, fragments, staples, whatever taxonomy we pick, should not be correlated with the way those chunks will be assembled or deployed downstream. We should not make upstream assumptions about those aspects, because they may introduce more issues or biases than they were meant to solve in the first place.

It is also the approach promoted by the C4 model, where model views are decoupled from deployment views. You should discuss a model in an abstract way, without noise from the deployment dimension. The relationship between two actors of the ecosystem has to be honored wherever those actors land. This relationship can be improved or degraded in terms of performance, security, … but we should not be exposed to those dimensions while discussing the coupling between those actors.

At this stage, you may think that it looks a lot like the micro-services pattern. And you are right. Where the approaches differ is that the weaving stage is considered not only a first-class citizen here but a building block. Therefore, you will have to leverage a structuring backbone to build upon and to offload assembly and deployment facilities to. It is no longer only a matter of standards (REST, gRPC, …) to obey while figuring out later how to efficiently assemble the pieces.
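To make "weaving as a building block" concrete, here is a minimal Python sketch of a backbone that owns assembly. The `Backbone` class, its `register`/`get` methods and the greeter modules are hypothetical names chosen for illustration; frameworks such as Orleans or Service Weaver provide far richer versions of this. Modules only declare what they need by name, and the backbone decides how to build and wire them. That is the seam where a deployment-aware implementation, such as a remote proxy, could later be swapped in.

```python
from typing import Callable, Dict, Protocol


class Greeter(Protocol):
    """Contract between modules; says nothing about where the module runs."""

    def greet(self, name: str) -> str: ...


class Backbone:
    """Hypothetical weaving backbone: modules register factories by name and
    the backbone owns assembly, so modules never wire each other up."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[["Backbone"], object]] = {}
        self._instances: Dict[str, object] = {}

    def register(self, name: str, factory: Callable[["Backbone"], object]) -> None:
        self._factories[name] = factory

    def get(self, name: str) -> object:
        # Build each component once, on first use; dependencies are resolved
        # through the backbone instead of being constructed directly.
        if name not in self._instances:
            self._instances[name] = self._factories[name](self)
        return self._instances[name]


class EnglishGreeter:
    def greet(self, name: str) -> str:
        return f"Hello, {name}"


class Frontend:
    def __init__(self, greeter: Greeter) -> None:
        self._greeter = greeter

    def handle(self, name: str) -> str:
        return self._greeter.greet(name).upper()


backbone = Backbone()
backbone.register("greeter", lambda bb: EnglishGreeter())
backbone.register("frontend", lambda bb: Frontend(bb.get("greeter")))
print(backbone.get("frontend").handle("ada"))  # HELLO, ADA
```

Replacing `EnglishGreeter` with a proxy that forwards calls elsewhere would only touch its `register` line; `Frontend` stays unchanged.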

Looking at existing frameworks is often a good way to gauge a pattern's maturity. Likewise, when engaging with a new paradigm, leveraging an existing framework instead of starting from scratch is often a good indicator of team maturity.

The Model View ViewModel pattern, aka MVVM, is well known, and there are plenty of existing frameworks across many stacks for those who want to apply it. You are likely to find a good fit. Apart from seasoned MVVM developers with very specific needs, it makes literally no sense to start building a brand-new one.

Proto Actor and Orleans are two actor model frameworks that accommodate the modular approach very well, letting developers code in an agnostic fashion while seamlessly enabling scaling.

Service Weaver is a relatively new player in the game, but it strengthens the case that yet another big company sees interest in this pattern.

| Framework | Author | Technology |
|---|---|---|
| Proto Actor | Asynkron | .NET, Go, gRPC, protobuf |
| Orleans | Microsoft | .NET |
| Service Weaver | Google | Go |

Split

Software should reconcile Agile delivery with evolving requirements.

The modular monolith paradigm encourages materializing software dimensions, which can then be operated independently.

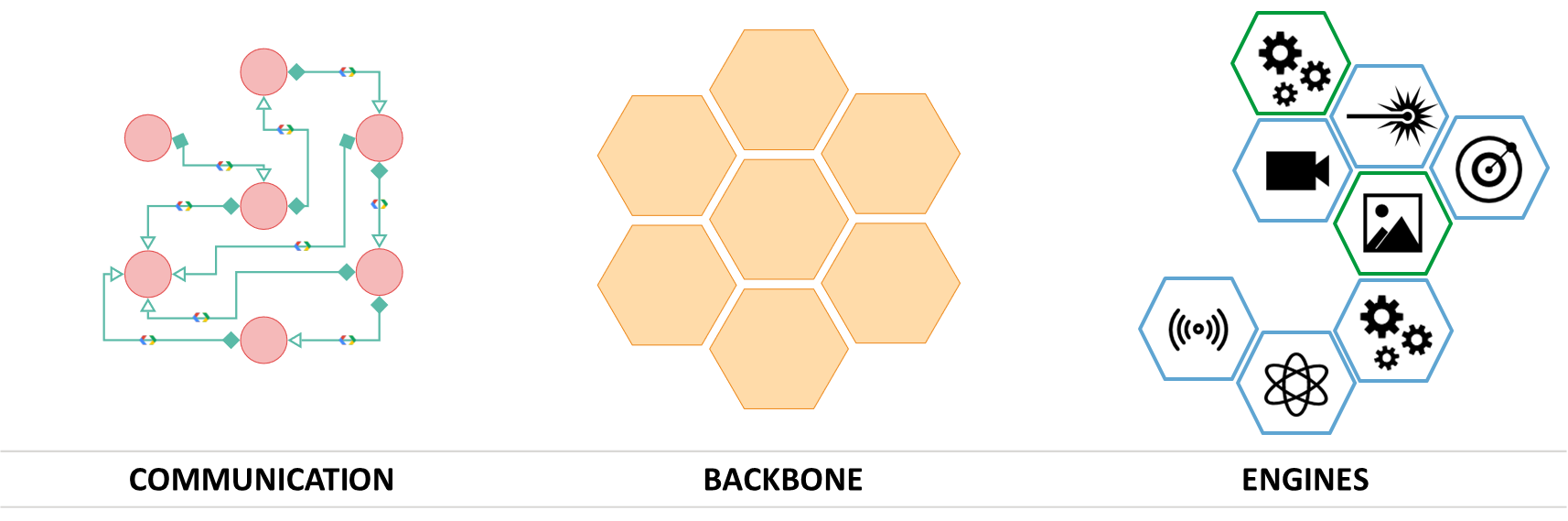

Here we can see a common three-dimension split:

- Communication: the underlying communication plumbing

- Backbone: acting as a placeholder for engines

- Engines: the hosted processing units

Each of these parts is fully isolated, allowing one to mix and match them to accommodate customer use cases, e.g.:

- customer with a legacy communication system

- customer without GPU capacity
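Here is a minimal Python sketch of this mix-and-match. The `TRANSPORTS` and `ENGINES` catalogs, `Backbone` and `assemble` are hypothetical names. The backbone is assembled from one communication plugin and one engine picked per customer profile, here driven by whether the host has a GPU. Both engines are plain `sum` stand-ins. What matters is that they share a signature, so the backbone never knows which one it hosts.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Engine = Callable[[List[float]], float]
Transport = Callable[[str], str]


def modern_transport(frame: str) -> str:
    return frame


def legacy_transport(frame: str) -> str:
    # Stand-in for a legacy system, e.g. one that only accepts upper-case frames.
    return frame.upper()


def gpu_engine(values: List[float]) -> float:
    return sum(values)  # placeholder for GPU-accelerated processing


def cpu_engine(values: List[float]) -> float:
    return sum(values)  # CPU-only fallback honoring the same contract


TRANSPORTS: Dict[str, Transport] = {"modern": modern_transport, "legacy": legacy_transport}
ENGINES: Dict[str, Engine] = {"gpu": gpu_engine, "cpu": cpu_engine}


@dataclass
class Backbone:
    """Hosts one engine and ships its results through one transport."""

    transport: Transport
    engine: Engine

    def run(self, values: List[float]) -> str:
        return self.transport(f"result={self.engine(values)}")


def assemble(communication: str, has_gpu: bool) -> Backbone:
    # Each dimension is picked independently of the other.
    engine = ENGINES["gpu" if has_gpu else "cpu"]
    return Backbone(transport=TRANSPORTS[communication], engine=engine)


# Customer with a legacy communication system, then customer without GPU capacity.
print(assemble("legacy", has_gpu=True).run([1.0, 2.0]))   # RESULT=3.0
print(assemble("modern", has_gpu=False).run([1.0, 2.0]))  # result=3.0
```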

Agnosticity

Coupling and maintenance must be optimized.

The modular monolith paradigm fosters thinking about software in terms of encapsulation and materializing (virtual) layers accordingly. By doing so, you gain the ability to locally leverage acknowledged standards or best practices while increasing overall agnosticity.

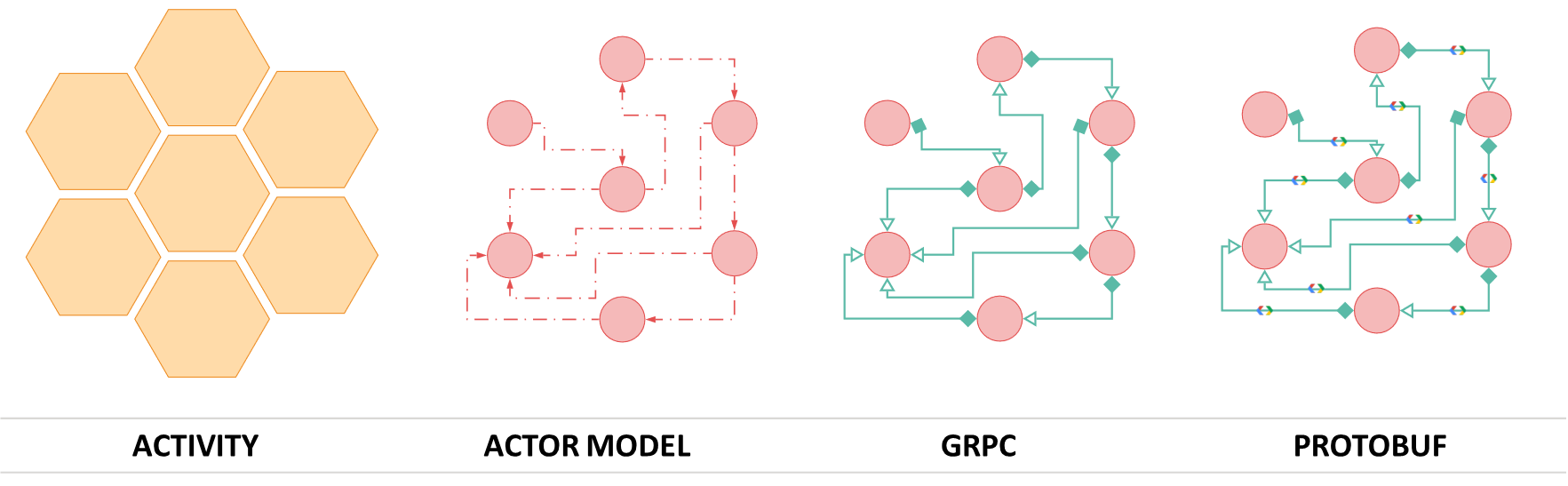

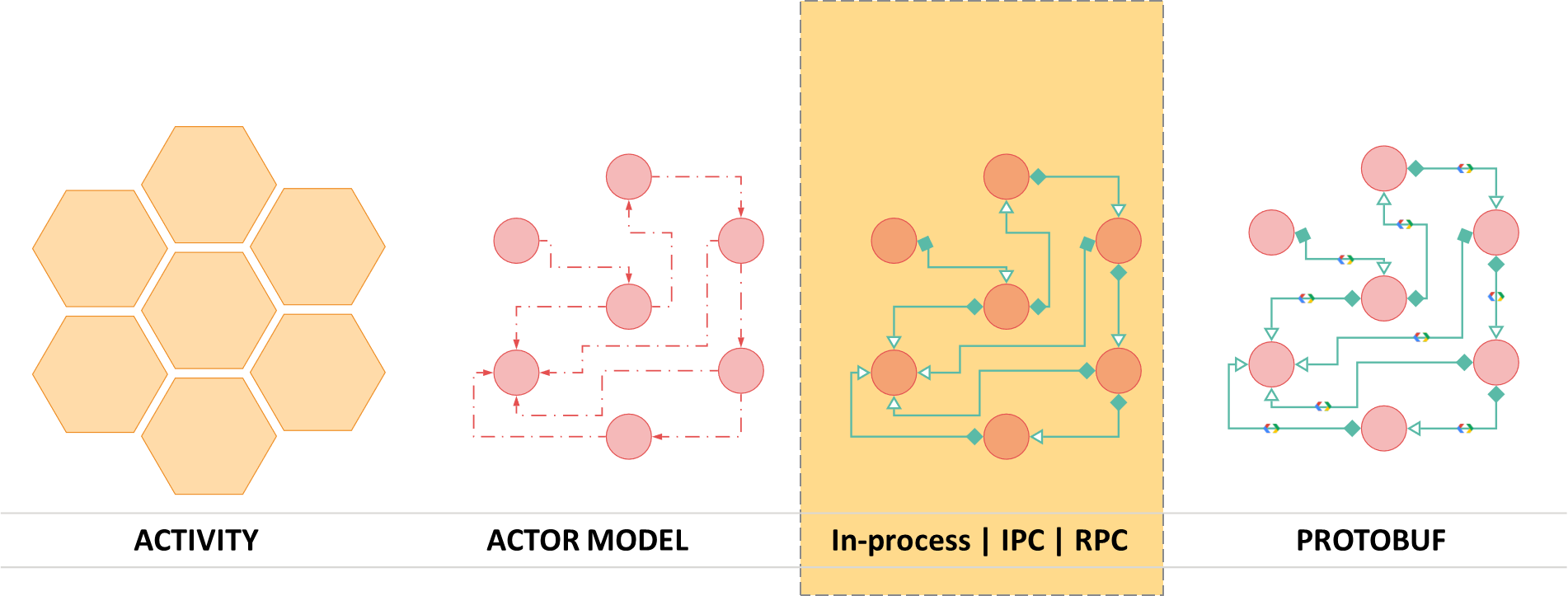

Here we end up with a four-layer setup:

- Activity, to manage the lifecycle

- Actor model, to organize activity interactions and leverage built-in supervision and scaling paradigms

- gRPC as the communication channel, to leverage an acknowledged and robust framework

- Protobuf IDL as message contracts, to ease sharing and extensibility

The choices we make within a given layer are scoped to that particular layer. Apart from its public API, a layer does not leak any information that may bias decisions upstream or downstream.
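The two innermost layers can be sketched in a few lines of Python. This illustrates the scoping rule, not a real actor framework. An `Activity` only exposes lifecycle hooks, and a hypothetical `Actor` hosts it behind a mailbox drained by a single thread, so messages are processed one at a time. The gRPC and Protobuf layers are deliberately left out: adding them would change how messages reach `tell`, not the activity code. Supervision and scaling, which frameworks like Proto Actor or Orleans provide, are not modeled.

```python
import queue
import threading
from typing import Any, List


class Activity:
    """Layer 1: lifecycle only. Knows nothing about threads or transport."""

    def on_start(self) -> None: ...

    def on_message(self, msg: Any) -> None: ...

    def on_stop(self) -> None: ...


class Actor:
    """Layer 2: a minimal actor. One thread drains the mailbox, so the hosted
    activity sees messages one at a time and needs no locks of its own."""

    _STOP = object()  # sentinel telling the loop to exit

    def __init__(self, activity: Activity) -> None:
        self._activity = activity
        self._mailbox: "queue.Queue[Any]" = queue.Queue()
        self._thread = threading.Thread(target=self._loop, daemon=True)

    def start(self) -> "Actor":
        self._thread.start()
        return self

    def tell(self, msg: Any) -> None:
        self._mailbox.put(msg)  # fire-and-forget, like an actor message

    def stop(self) -> None:
        self._mailbox.put(self._STOP)
        self._thread.join()

    def _loop(self) -> None:
        self._activity.on_start()
        while True:
            msg = self._mailbox.get()
            if msg is self._STOP:
                break
            self._activity.on_message(msg)
        self._activity.on_stop()


class Counter(Activity):
    def __init__(self) -> None:
        self.events: List[str] = []
        self.total = 0

    def on_start(self) -> None:
        self.events.append("start")

    def on_message(self, msg: int) -> None:
        self.total += msg

    def on_stop(self) -> None:
        self.events.append("stop")


counter = Counter()
actor = Actor(counter).start()
for i in range(1, 4):
    actor.tell(i)
actor.stop()
print(counter.events, counter.total)  # ['start', 'stop'] 6
```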

Smart

The stack should be deployed and operated contextually, meaning infrastructure insights act as drivers to pick the most efficient shape.

Assume we start from the previous stack and focus on the communication layer. We can tweak this layer in multiple ways and decide which combinations we support. Once again, the modular monolith paradigm supports this approach, and some frameworks even do all the heavy lifting for us.

Call

Moving from in-process calls to inter-process calls to remote process calls is a matter of configuration. It changes neither the relationships we define between the modules nor the way they are triggered. The switch is simply operated in another dimension, taking into account the infrastructure we deploy on and making the smartest decision.
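Here is a hedged Python sketch of a call switched by configuration. Caller and handler stay the same; only the channel class picked from a hypothetical `{"call": ...}` setting changes. `WireChannel` merely serializes to JSON bytes to emulate what crossing a process or network boundary imposes. A real framework would put a pipe, a socket or a gRPC stub there.

```python
import json
from typing import Any, Callable, Dict

Message = Dict[str, Any]
Handler = Callable[[Message], Message]


class InProcessChannel:
    """Plain function call: no copy, no serialization."""

    def __init__(self, handler: Handler) -> None:
        self._handler = handler

    def call(self, request: Message) -> Message:
        return self._handler(request)


class WireChannel:
    """Stand-in for inter-process or remote calls: request and response cross
    a byte boundary (JSON here), as they would over a pipe or a socket."""

    def __init__(self, handler: Handler) -> None:
        self._handler = handler
        self.bytes_sent = 0

    def call(self, request: Message) -> Message:
        wire = json.dumps(request).encode("utf-8")
        self.bytes_sent += len(wire)
        response = self._handler(json.loads(wire))
        return json.loads(json.dumps(response))


CHANNELS = {"in_process": InProcessChannel, "wire": WireChannel}


def connect(handler: Handler, config: Dict[str, str]):
    # The only place that knows how modules are deployed relative to each other.
    return CHANNELS[config["call"]](handler)


def scale(request: Message) -> Message:
    return {"value": request["value"] * request["factor"]}


for mode in ("in_process", "wire"):
    channel = connect(scale, {"call": mode})
    print(mode, channel.call({"value": 21, "factor": 2}))  # {'value': 42} both times
```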

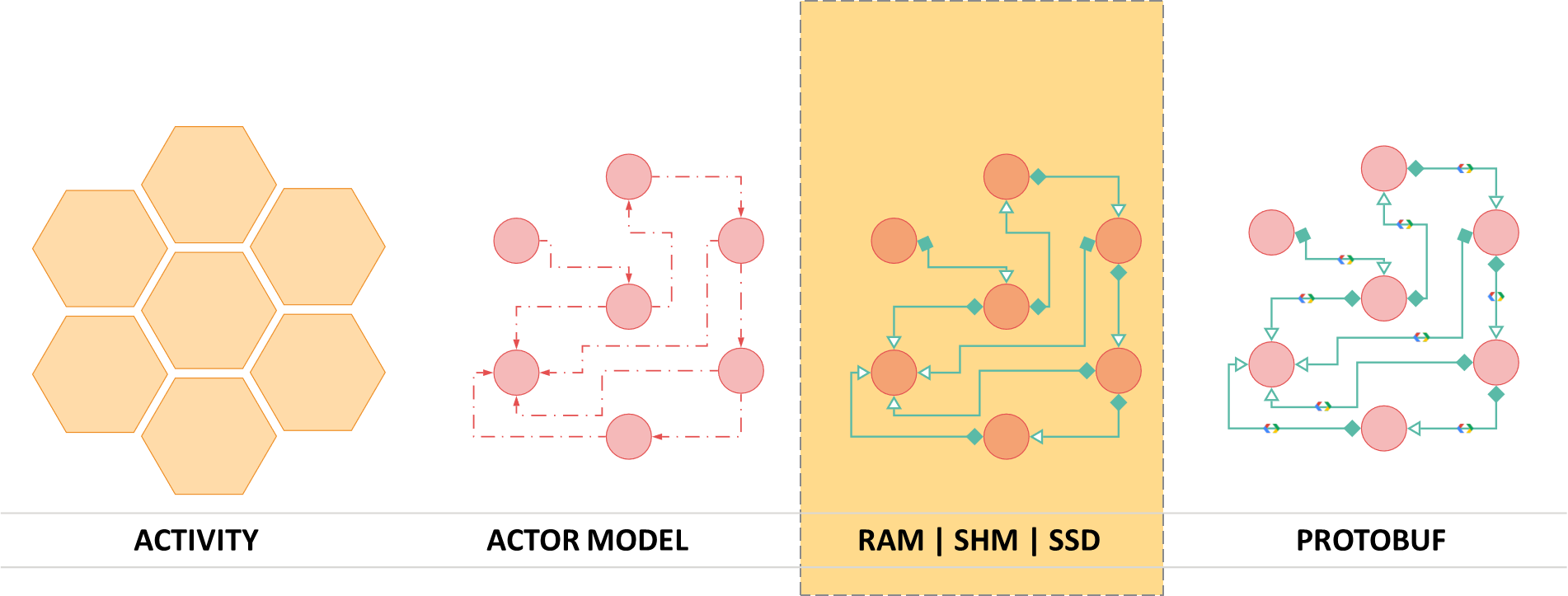

Exchange

The same goes for data exchange. It can occur in RAM, through shared memory, or even require disk I/O. From the outside, it only means speed will vary from super fast to slow. Nothing more. The contract remains unchanged.
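The Python sketch below gives three hypothetical stores the same `put`/`get`/`close` contract: plain RAM, a segment from the standard `multiprocessing.shared_memory` module (Python 3.8+), and files in a temporary directory. The caller loop at the end does not know which one it is talking to; only speed and reach differ.

```python
import os
import tempfile
from multiprocessing import shared_memory
from typing import Dict, Tuple


class RamStore:
    """Fastest: plain process memory."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._data[key] = bytes(data)

    def get(self, key: str) -> bytes:
        return self._data[key]

    def close(self) -> None:
        self._data.clear()


class SharedMemoryStore:
    """Same host, other processes: they could attach to a segment by name
    with shared_memory.SharedMemory(name=...)."""

    def __init__(self) -> None:
        self._segments: Dict[str, Tuple[shared_memory.SharedMemory, int]] = {}

    def put(self, key: str, data: bytes) -> None:
        # Size must be > 0 and the OS may round it up, so the real length is kept.
        shm = shared_memory.SharedMemory(create=True, size=max(len(data), 1))
        shm.buf[: len(data)] = data
        self._segments[key] = (shm, len(data))

    def get(self, key: str) -> bytes:
        shm, size = self._segments[key]
        return bytes(shm.buf[:size])

    def close(self) -> None:
        for shm, _ in self._segments.values():
            shm.close()
            shm.unlink()  # release the segment itself, not just this mapping
        self._segments.clear()


class DiskStore:
    """Slowest, but the data survives a process restart."""

    def __init__(self) -> None:
        self._dir = tempfile.mkdtemp()

    def put(self, key: str, data: bytes) -> None:
        with open(os.path.join(self._dir, key), "wb") as f:
            f.write(data)

    def get(self, key: str) -> bytes:
        with open(os.path.join(self._dir, key), "rb") as f:
            return f.read()

    def close(self) -> None:
        for name in os.listdir(self._dir):
            os.remove(os.path.join(self._dir, name))
        os.rmdir(self._dir)


for store in (RamStore(), SharedMemoryStore(), DiskStore()):
    store.put("frame", b"sensor-frame")
    print(type(store).__name__, store.get("frame"))  # b'sensor-frame' every time
    store.close()
```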

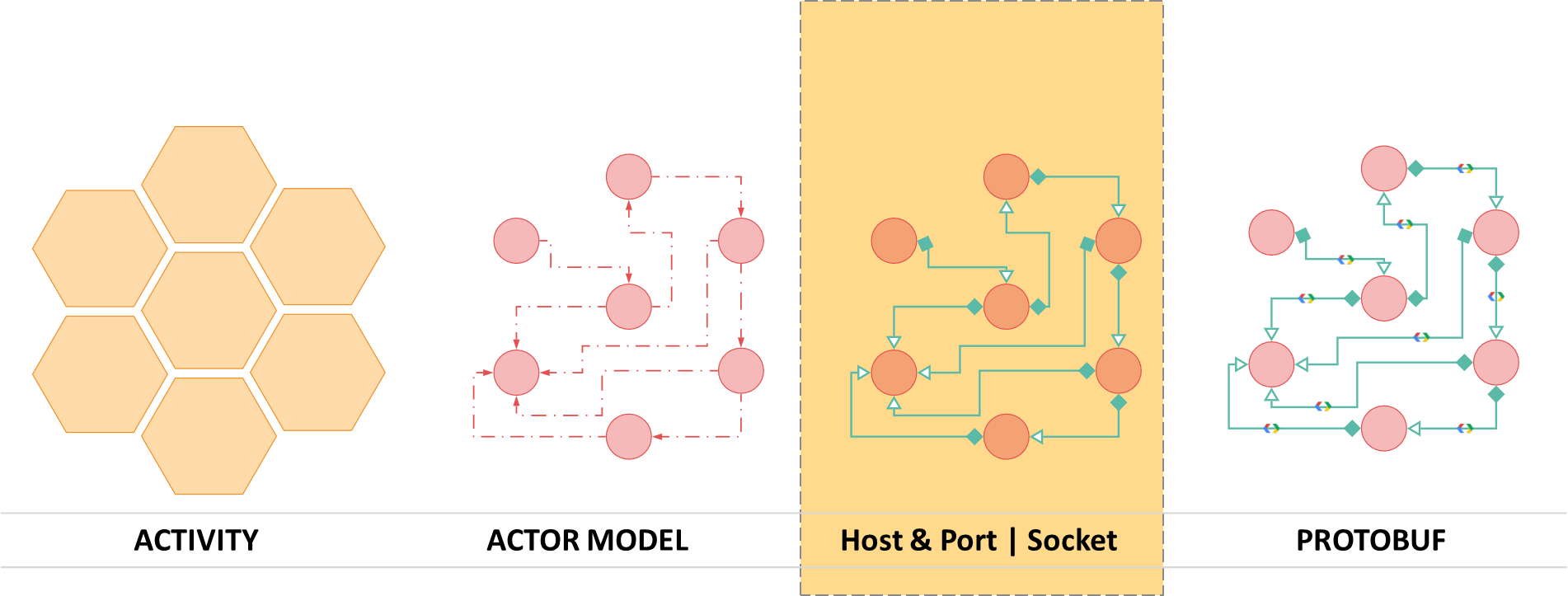

Protocol

Last but not least, we can contextually improve the communication protocol. Why should we pay the penalty of host and port allocation when deploying multiple endpoints locally? In this case, one could leverage local sockets (e.g., Unix domain sockets) instead, smoothing deployment: no duplicate port allocation, no firewall rule to set up to open ports, no VPN issues, …
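Here is a Python sketch of that contextual choice, assuming a POSIX host (`socket.AF_UNIX` is not available on every platform). The hypothetical `open_listener` hands out a Unix domain socket path for local endpoints and a TCP address otherwise, while the request code stays identical. gRPC itself accepts `unix:` target URIs, so the same idea carries over to a gRPC channel.

```python
import os
import socket
import tempfile
import threading
from typing import Any, Tuple


def open_listener(local: bool) -> Tuple[socket.socket, socket.AddressFamily, Any]:
    """Local endpoints get a Unix domain socket path: no port to allocate,
    no firewall rule to open. Other endpoints fall back to TCP."""
    if local:
        family = socket.AF_UNIX
        address: Any = os.path.join(tempfile.mkdtemp(), "engine.sock")
    else:
        family = socket.AF_INET
        address = ("127.0.0.1", 0)  # port 0: the OS picks a free port
    server = socket.socket(family, socket.SOCK_STREAM)
    server.bind(address)
    server.listen()
    return server, family, server.getsockname()


def recv_all(sock: socket.socket) -> bytes:
    chunks = []
    while chunk := sock.recv(4096):
        chunks.append(chunk)
    return b"".join(chunks)


def serve_once(server: socket.socket) -> None:
    # Toy engine: upper-cases whatever it receives, then closes the connection.
    conn, _ = server.accept()
    with conn:
        conn.sendall(recv_all(conn).upper())


def request(family: socket.AddressFamily, address: Any, payload: bytes) -> bytes:
    # Caller code is identical whichever transport was picked.
    with socket.socket(family, socket.SOCK_STREAM) as client:
        client.connect(address)
        client.sendall(payload)
        client.shutdown(socket.SHUT_WR)  # signal the end of the request
        return recv_all(client)


def roundtrip(local: bool, payload: bytes) -> bytes:
    server, family, address = open_listener(local)
    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()
    try:
        return request(family, address, payload)
    finally:
        worker.join()
        server.close()
        if family == socket.AF_UNIX:
            os.unlink(address)  # socket files are not removed on close
            os.rmdir(os.path.dirname(address))


print(roundtrip(local=True, payload=b"ping"))   # b'PING'
print(roundtrip(local=False, payload=b"ping"))  # b'PING'
```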

Testing

Performance is the crux of massive simulation.

To move towards this target, the modular monolith paradigm promotes instrumentation and the use of mocks.

Here we can see four main categories of tests, namely:

- Consistency: one can ensure that a set of activities is properly connected

- Workflow: given a set of activities, we can exercise them with empty processing and messages to assess how they interact

- Load: we feed activities with smart stubs, faking inner processing time, data load or frequency discrepancies, and once again exercise them to measure impacts and surface metrics.

- Trace: we can dynamically instrument the communication pipeline. One could then diagnose issues by surfacing verbose logs. Another could alter message shapes to encrypt or enrich them (e.g., adding wall-clock time).
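The four categories can be sketched compactly in Python. All names here (`Activity`, `check_consistency`, `stub`, `traced`, `run_workflow`) are hypothetical. The sketch has a consistency check over declared topics, a workflow run with empty processing, a stub faking processing time for load tests, and a tracing wrapper that enriches messages with wall-clock time. A real backbone would more likely apply these as interceptors on its communication layer than as plain function wrappers.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Set

Message = Dict[str, object]
Process = Callable[[Message], Message]


@dataclass
class Activity:
    name: str
    consumes: Set[str]
    produces: Set[str]
    process: Process = lambda msg: msg  # empty processing by default (workflow tests)


def check_consistency(activities: List[Activity], sources: Set[str]) -> List[str]:
    """Consistency: each consumed topic is produced by an activity or an external source."""
    produced = set(sources).union(*(a.produces for a in activities))
    return sorted(f"{a.name} <- {t}" for a in activities for t in a.consumes - produced)


def stub(delay_s: float) -> Process:
    """Load: fake the inner processing time instead of running the real engine."""

    def process(msg: Message) -> Message:
        time.sleep(delay_s)
        return msg

    return process


def traced(process: Process, log: List[Message]) -> Process:
    """Trace: wrap one hop and enrich its output with wall-clock time."""

    def wrapper(msg: Message) -> Message:
        out = dict(process(msg))
        out["wall_clock"] = time.time()
        log.append(out)
        return out

    return wrapper


def run_workflow(activities: List[Activity], msg: Message) -> Message:
    """Workflow: push one message through the activities in order."""
    for activity in activities:
        msg = activity.process(msg)
    return msg


log: List[Message] = []
pipeline = [
    Activity("ingest", consumes={"sensor"}, produces={"frames"}),
    Activity("detect", consumes={"frames"}, produces={"tracks"},
             process=traced(stub(0.01), log)),
]
print(check_consistency(pipeline, sources={"sensor"}))      # []
print(check_consistency(pipeline[1:], sources={"sensor"}))  # ['detect <- frames']

start = time.perf_counter()
result = run_workflow(pipeline, {"id": 1})
print(result["id"], "wall_clock" in result, time.perf_counter() - start)
```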

Location transparency

End users wish for an efficient and straightforward UX.

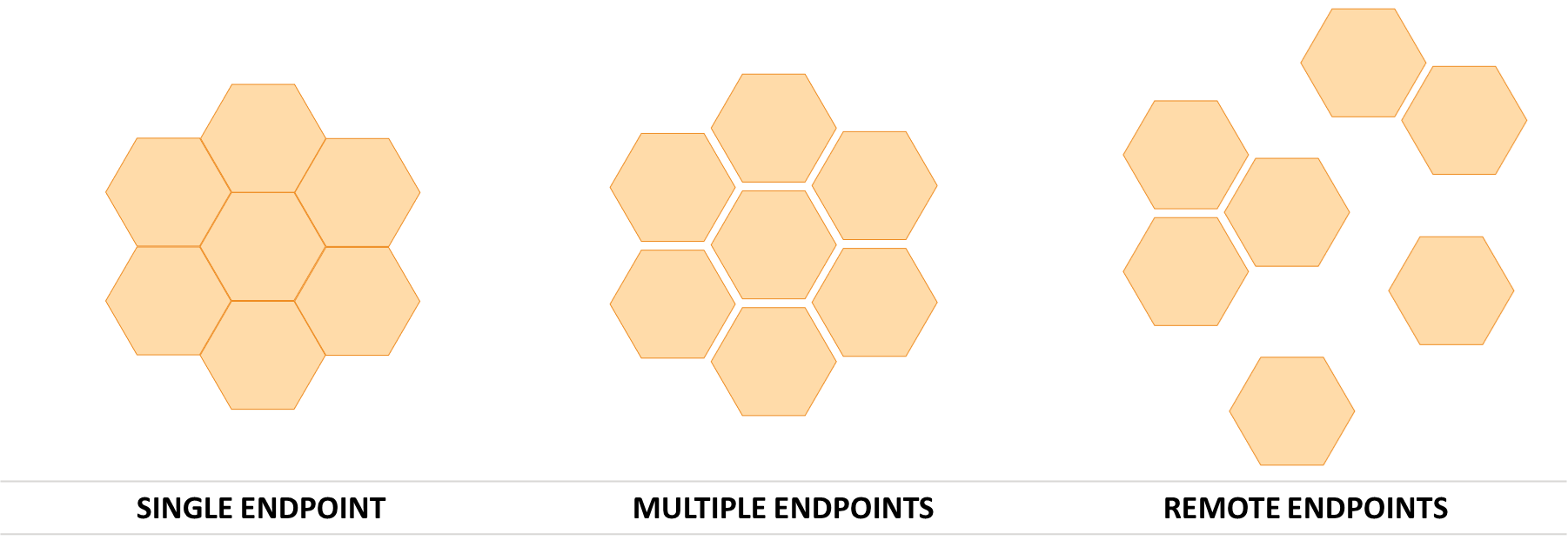

Leveraging the modular monolith paradigm, one can shape the entire workflow to seamlessly accommodate scaling.

The whole backbone can accommodate vertical and horizontal scaling through configuration alone.

The shape of the unit of deployment can thus be tailored to customer needs, e.g., per use case, per licensing, per hardware capabilities, …

One can thus seamlessly move from a single-endpoint deployment to a multiple-endpoint one, and then to a remote one.

The key point here is that this is a pure infrastructure consideration: it impacts neither how activities are tangled together nor the hosted engines.

Said differently, it is a deployment aspect, and it is thus confined to this stage, not pushed upstream.
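To illustrate that placement is purely a deployment decision, here is a hedged Python sketch. A hypothetical `plan` function maps the same four-activity workflow onto a single endpoint, several local endpoints, or remote hosts. The channel between two activities is then derived from the placement, echoing the call and protocol choices discussed earlier. The activity list itself is never modified.

```python
from typing import Dict, List

# Hypothetical workflow: its wiring never changes below, only its placement does.
ACTIVITIES = ["ingest", "detect", "track", "report"]


def plan(shape: str, hosts: List[str]) -> Dict[str, str]:
    """Map each activity to an endpoint, written as "host/process"."""
    if shape == "single":  # one endpoint hosts everything
        return {a: f"{hosts[0]}/proc-0" for a in ACTIVITIES}
    if shape == "multi":  # one process per activity, same host
        return {a: f"{hosts[0]}/proc-{i}" for i, a in enumerate(ACTIVITIES)}
    if shape == "remote":  # activities spread round-robin across hosts
        return {a: f"{hosts[i % len(hosts)]}/proc-0" for i, a in enumerate(ACTIVITIES)}
    raise ValueError(f"unknown deployment shape: {shape}")


def transport_between(placement: Dict[str, str], src: str, dst: str) -> str:
    """Derived from placement only: the cheapest channel both endpoints allow."""
    a, b = placement[src], placement[dst]
    if a == b:
        return "in-process"
    if a.split("/")[0] == b.split("/")[0]:
        return "local socket"
    return "network"


for shape in ("single", "multi", "remote"):
    placement = plan(shape, hosts=["node-a", "node-b"])
    print(shape, transport_between(placement, "detect", "track"))
# single in-process / multi local socket / remote network
```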